Information Epidemic: Susceptibility, Dissemination and Immunity of False Information

Original Linden jizhi club

Introduction

The spread of false information poses a considerable threat to public health and to the successful control of global pandemics. Studies have found that exposure to false information may reduce people's willingness to get vaccinated and to comply with public health guidelines. A review published in Nature Medicine on March 10 summarizes three key dimensions of the information epidemic (infodemic): susceptibility, spread and immunity. It finds that although people can be deceived by false information because they fail to pay attention to accuracy, social and political beliefs and identity structures also affect how readily they believe it. The review further discusses how false information spreads in social networks and which measures can improve psychological immunity against it. The author, Sander van der Linden, is a professor of psychology at the University of Cambridge, UK, whose research field is social and public psychology. This article is a full translation of the paper.

Research fields: information epidemic (infodemic), network spread models, false information, COVID-19 rumors, psychological inoculation.

Sander van der Linden | Author

Guo Ruidong | Translator

Liu Zhihang and Liang Jin | Review

Deng Yixue | Editor

Paper title:

Misinformation: susceptibility, spread, and interventions to immunize the public

Paper link:

https://www.nature.com/articles/s41591-022-01713-6

Contents

Abstract

Introduction to false information research

I. Susceptibility

II. Spread

III. Immunity

Summary

Abstract

The spread of false information poses a considerable threat to public health and to the successful control of global pandemics. Studies have found that exposure to false information may reduce people's willingness to get vaccinated and to comply with public health guidelines. This recent review in Nature Medicine summarizes three key dimensions of the information epidemic (infodemic): susceptibility, spread and immunity. Existing research has addressed three questions: why some people are more susceptible to false information than others, how false information spreads in online social networks, and which interventions can help build psychological immunity to false information. The review discusses the implications of this research for curbing the infodemic.

Introduction to false information research

At the beginning of 2020, the World Health Organization (WHO) announced that the world was falling into an information epidemic (infodemic). An infodemic is characterized by information overload, and in particular by an abundance of false and misleading information. Although researchers have debated the impact of fake news on major social events (such as political elections), the spread of false information is more likely to cause significant harm to public health, especially during the COVID-19 pandemic. For example, studies in different countries and regions show that endorsing false information about COVID-19 is closely associated with people's unwillingness to follow public health guidelines [4,5,6,7], to get vaccinated and to recommend the vaccine to others. Experimental evidence shows that exposure to false information about vaccination reduces the vaccination intention of those who say they will "definitely accept the vaccine" by about 6%, thus weakening the vaccine's potential to deliver herd immunity [8]. Analyses of social network data estimate that, without intervention, anti-vaccination content on social platforms such as Facebook will come to dominate within the next decade. Other studies have found that exposure to false information about COVID-19 is associated with an increased tendency to ingest harmful substances and to engage in violence. Of course, false information was a threat to public health long before the COVID-19 pandemic. The debunked link between the MMR vaccine and autism has been associated with a significant decline in vaccination coverage in the UK. For decades, the makers of Listerine falsely claimed that their mouthwash could cure the common cold. False information about tobacco products has influenced people's attitudes towards smoking. In 2014, an Ebola clinic in Liberia was attacked because people mistakenly believed that the virus was part of a government conspiracy.

Given the unprecedented scale and speed with which false information spreads online, researchers increasingly rely on epidemiological models to understand the spread of fake news. The key quantity in these models is the basic reproduction number (R0): the number of people who start posting fake news (secondary cases) after contact with someone who has already posted false information (an infectious individual). It is therefore helpful to think of false information as a viral pathogen that can infect a host and spread rapidly from one person to another within a given network, without physical contact. One advantage of this epidemiological approach is that early detection systems can be designed to identify superspreaders, so that interventions can be deployed in time to curb the spread of viral false information [18].

This review provides readers with a conceptual overview of the recent literature on false information, together with a research agenda (Box 1), organized around three main theoretical dimensions that follow the viral analogy: susceptibility, spread and immunity.

Box 1: Future research agenda and recommendations

Research question 1: What factors make people easily misled by false information?

Better integrate accuracy motives with social, political and cultural motives to explain people's susceptibility to false information.

Define, develop and validate standardized tools for assessing susceptibility to false information in general and specific fields.

Research Question 2: How does false information spread in social networks?

Outline more clearly the conditions under which "exposure" leads to "infection", including the influence of repeated exposure, the micro-targeting of fake news to specific audiences on social media, contact with superspreaders, the role of echo chambers, and the structure of the social network itself.

Provide more accurate population-level estimates of exposure to false information by (1) capturing more diverse types of false information and (2) linking exposure to different types of fake news across traditional and social media platforms.

Research question 3: Can we treat people for false information, or protect them from it?

Focus on evaluating the relative effectiveness of different debunking methods in the field, and on how debunking (therapeutic) and preventive interventions can be combined to maximize their protective effect.

Model and evaluate how psychological inoculation (intervention) methods spread online and affect sharing behavior on social media and in the real world.

Before reviewing the existing literature to help answer these questions, it is necessary to briefly discuss the meaning of the word "misinformation", because inconsistent definitions affect not only how research designs are conceptualized but also the nature and validity of key outcome measures. In fact, false information has been called an all-encompassing concept [20], not only because definitions differ but also because the behavioral consequences for public health may vary with the type of false information. The term "fake news" is often considered problematic because it does not adequately describe the full range of false information and because it has become a politicized rhetorical device. Box 2 discusses the different academic definitions of false information in more detail, but for the purposes of this review I will simply define false information in the broadest possible sense: "false or misleading information disguised as legitimate news", regardless of intent. Disinformation is usually distinguished from misinformation because it involves a clear intention to deceive or harm others. Because intent can be difficult to determine, my treatment of false information in this review covers both intentional and unintentional forms.

Box 2: The challenge of defining and operationalizing false information

One of the most frequently cited definitions of false information is "fabricated information that mimics news media content in form but not in organizational process or intent" [119]. This definition implies that what determines whether a story is false information is the journalistic or editorial process. Other definitions reflect similar views: producers of false information do not adhere to editorial norms, and the defining attribute of "falsity" lies at the level of the publisher, not the story. Others take a completely different view and define false information either in terms of the veracity of the content or in terms of whether common techniques were used to produce it [109].

Some definitions are arguably too narrow, because a news story does not need to be entirely false to be misleading. A very prominent example comes from the Chicago Tribune, a widely trusted outlet, which in January 2021 republished an article entitled "A healthy doctor died two weeks after COVID-19 vaccine injection". This story would not be classified as false on the basis of its source or its content, because the events are true when considered separately. However, at the time of publication there was no evidence of a causal relationship, so suggesting that the doctor died because of the COVID-19 vaccine is highly misleading and arguably unethical. This is not an obscure example: in early 2021 it was viewed more than 50 million times on Facebook [121].

Another potential challenge for content-based definitions arises when expert consensus on a public health issue is forming rapidly and is subject to uncertainty and change. What counts as true or false may then shift over time, which makes an oversimplified classification into "true" and "false" a potentially unstable attribute. For example, although news media initially claimed that ibuprofen would worsen COVID-19 symptoms, this claim was later retracted as more evidence emerged. The problem is that researchers often ask people whether they can accurately or reliably identify a series of true or false news headlines, and these headlines are either created or selected by the researchers according to different definitions of false information.

Outcome measures also differ. Sometimes the relevant outcome is susceptibility to false information, and sometimes it is the difference between true and false news detection, so-called "truth discernment". The only existing instrument that uses a psychometrically validated set of headlines is the recent "false information susceptibility test", a measure of news veracity discernment that is standardized against the test population. Overall, this means that hundreds of emerging studies on false information use outcome measures that are not standardized and not always easy to compare.
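To make the two outcome measures concrete, the following is a minimal sketch, assuming that each participant rates the perceived accuracy of a set of headlines on a fixed numeric scale. The function name, the rating scale and the sample data are hypothetical and are not taken from the review or from any published test.

```python
from statistics import mean

def score_headline_judgments(responses):
    """Score one participant's headline ratings.

    responses: list of (is_true, rating) pairs, where rating is the
    perceived accuracy of a headline on any fixed numeric scale
    (hypothetical format, chosen for illustration).
    Returns (susceptibility, discernment).
    """
    true_ratings = [rating for is_true, rating in responses if is_true]
    false_ratings = [rating for is_true, rating in responses if not is_true]
    if not true_ratings or not false_ratings:
        raise ValueError("need at least one true and one false headline")
    # Susceptibility: how accurate false headlines appear on average.
    susceptibility = mean(false_ratings)
    # Discernment: gap between ratings of true and false headlines.
    discernment = mean(true_ratings) - mean(false_ratings)
    return susceptibility, discernment

# Hypothetical ratings on a 1-7 scale: two true and two false headlines.
responses = [(True, 6), (True, 5), (False, 2), (False, 4)]
susceptibility, discernment = score_headline_judgments(responses)
print(susceptibility, discernment)
```

The two scores can diverge: a participant who rates every headline as highly accurate has high susceptibility but a discernment close to zero, which is one reason results based on different measures are hard to compare.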

I. Susceptibility

Although people use many cognitive heuristics to judge the truth of a claim (for example, the perceived credibility of the source), one particularly prominent finding helps explain why people are susceptible to false information. This finding is known as the "illusory truth effect": repeated claims are more likely to be judged as true than non-repeated (or novel) claims. Because mass media, politicians and social media influencers often repeat many falsehoods, the relevance of illusory truth has increased greatly. For example, the conspiracy theory that COVID-19 was bioengineered in a military laboratory in Wuhan, China, and the false statement that "COVID-19 is no worse than the flu" have appeared repeatedly in the media. The main cognitive mechanism behind people's tendency to think that repeated claims are correct is called processing fluency: the more a claim is repeated, the more familiar it becomes and the easier it is to process. In other words, the brain uses fluency as a signal of truth. Research shows that (1) prior exposure to fake news increases its perceived accuracy; (2) illusory truth can occur for specious claims; (3) prior knowledge may not protect people from illusory truth; and (4) illusory truth does not appear to be moderated by thinking style, such as analytical versus intuitive reasoning.

Although illusory truth can affect everyone, research shows that some people are more susceptible to false information than others. For example, one common finding is that older people are more susceptible to fake news, possibly because of factors such as cognitive decline and unfamiliarity with digital technology, although there are exceptions: in the context of COVID-19, older people appear less likely to endorse false information. People with more extreme and right-wing political orientations have consistently been found to be more susceptible to false information, even when it is non-political. However, the link between ideology and being misled is not always consistent across cultural contexts. Other factors, such as higher numeracy and cognitive and analytical thinking styles [36,40,41], are negatively associated with susceptibility to false information, although other scholars have identified partisanship as a potential moderating factor [42,43,44]. In fact, these individual differences have given rise to two competing overarching theoretical explanations of why people are easily misled. The first is usually called the classical "inattention" account; the second is usually called the "identity-protective" or "motivated cognition" account. I will discuss the emerging evidence for each in turn.

1.1 The inattention account

The inattention account, or classical reasoning theory, holds that people are committed to sharing accurate content but that the social media environment distracts them from making news-sharing decisions in line with their preference for accuracy. For example, because people are often bombarded with online news content, much of it emotional and political, and have limited time and resources to consider whether a news item is true, their ability to evaluate that content accurately may be seriously impaired. The inattention account draws on dual-process theories of human cognition, according to which people rely on two qualitatively different reasoning processes: System 1, which is mostly automatic, associative and intuitive, and System 2, which is more reflective, analytical and deliberate. A typical example is the Cognitive Reflection Test (CRT), a series of puzzles for which the intuitive or first answer is often wrong and the correct answer requires people to stop and think more carefully. The basic point is that activating more analytical System 2 reasoning can override faulty System 1 intuitions. Evidence for the inattention account comes from the finding that people who score higher on the CRT [36,41], who deliberate more [48], who have greater numerical skills [4] and who have more knowledge and education [37,49] are better at distinguishing true from false news [36], regardless of whether the news content is politically congruent. In addition, experimental interventions that prompt people to think more analytically or to consider the accuracy of news content [50,51] have been shown to improve the quality of people's news-sharing decisions and to reduce their acceptance of conspiracy theories [52].

1.2 The motivated reasoning account

In sharp contrast to the inattention account, the theory of (political) motivated reasoning [53,54,55] holds that a lack of information or of reflective reasoning is not the main driver of susceptibility to false information. Motivated reasoning occurs when a person starts the reasoning process with a predetermined goal (for example, someone may want to believe that vaccines are unsafe because their family members share this belief) and then interprets new (false) information in a way that serves that goal. The motivated reasoning account therefore holds that people's commitment to the groups they feel affinity with is what leads them to selectively endorse media content that reinforces deeply held political, religious or social identities. There are several variants of politically motivated reasoning, but the basic premise is that people attend not only to the accuracy of news content but also to the goals that the information may serve. For example, when a piece of fake news happens to offer positive information about someone's political group or negative information about a political opponent, it is judged to be more credible. A more extreme and scientifically contested version of this model, known as "motivated numeracy" [59], holds that more reflective and analytical (System 2) reasoning does not help people make more accurate assessments and is in fact often hijacked in the service of identity-based reasoning. Evidence for this claim comes from the finding that on contested scientific issues, such as climate change [60] or stem cell research [61], people with the highest numeracy and education are the most polarized. Experiments also show that when people are asked to make a causal inference about a data problem (such as the benefits of a new rash treatment), those with stronger numerical skills perform better when the issue is non-political. In contrast, when the same data are presented as the results of a new study on gun control, people become more polarized and less accurate, and these patterns are more pronounced among people with higher numeracy. Other studies have found that politically conservative individuals are more likely to (wrongly) judge false information from conservative media as true than false information from liberal media, and vice versa for political liberals, which highlights the key role of politics in truth discernment [62].

1.3 Susceptibility: Limitations and Future Research

It is worth noting that both accounts face substantial criticism and limitations. For example, independent replications of interventions designed to increase attention to accuracy have produced mixed results [63] and have raised questions about how partisan bias is conceptualized in these studies [43], including the possibility that the intervention effect is moderated by people's political identity [44]. The motivated reasoning account, in turn, has seen several failed and inconsistent replications [64,65,66]. For example, a large nationally representative study in the United States shows that although polarization on global warming was indeed greatest at baseline among partisans with the highest level of education, an experimental intervention that emphasized the scientific consensus on global warming neutralized or even reversed this effect [66]. These findings point to a larger confound in the motivated reasoning literature: partisanship may simply reflect selective exposure rather than motivated reasoning [66,67,68], because the role of politics is confounded with people's prior beliefs. Although people are polarized on many issues, this does not mean that they are unwilling to update their (misguided) beliefs in light of evidence. In addition, people may refuse to update their beliefs not because motivated reasoning leads them to reject the information, but simply because they do not find the information credible, whether because they discount the source or the veracity of the content itself. This "equivalence paradox" [69] makes it difficult to disentangle accuracy-based preferences from motivation-based ones.

Future research should therefore not only carefully manipulate people's motivations when they process politically incongruent (false) information, but also offer a more integrated theoretical account of susceptibility to false information. For example, identity motives may be more salient for political fake news, whereas mechanisms such as lack of knowledge, inattention or confusion are more likely to play a role for false information about non-politicized issues (such as falsehoods about treatments for the common cold). Of course, public health issues such as COVID-19 can become politicized relatively quickly, in which case the importance of motivated reasoning in driving susceptibility to false information may increase. Accuracy preferences and motivated reasoning often conflict. For example, people may understand that a news story is unlikely to be true, but if the false information promotes the goals of their social group, they may be more inclined to give up their desire for accuracy and pursue motives that conform to the norms of their community. In other words, in any given context, the weight people place on accuracy relative to social goals determines how and when they update their beliefs in response to false information. Much can be gained by attending to the interaction between accuracy and sociopolitical goals when explaining why people are susceptible to false information.

II. Spread

2.1 Measuring the information epidemic

Returning to the viral analogy, researchers have adopted epidemiological models, such as the susceptible-infected-recovered (SIR) model, to measure and quantify the spread of false information in online social networks. In this setting, R0 usually denotes the number of people who start posting fake news after contact with someone who is already infected. When R0 is greater than 1, false information grows exponentially and spreads into an information epidemic. When R0 is less than 1, the information epidemic eventually dies out. Analyses of social media platforms show that all of them have the potential to fuel such information epidemics, but some more than others. For example, research on Twitter found that fake news is 70% more likely to be shared than real news, and that real news takes six times as long to reach 1,500 people. Although fake news spreads faster and deeper than real news, it must be emphasized that these findings rest on a relatively narrow definition of fact-checked news. More recent studies indicate that these estimates are likely to be platform-dependent.
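The threshold behavior of R0 can be illustrated with a minimal discrete-time SIR sketch. The mapping of compartments to sharing behavior (susceptible: has not shared; infected: actively sharing; recovered: has stopped sharing) and all parameter values are illustrative assumptions, not estimates from the studies cited. In this simple model R0 equals beta / gamma.

```python
def simulate_sir(beta, gamma, population=10_000, initial_sharers=10, steps=200):
    """Discrete-time SIR sketch of false information spreading.

    beta:  assumed rate at which contact with a sharer turns a
           susceptible user into a sharer (per time step)
    gamma: assumed rate at which sharers stop sharing (per time step)
    Returns (peak number of active sharers, total who have stopped sharing).
    """
    s = population - initial_sharers
    i = initial_sharers
    r = 0
    peak = i
    for _ in range(steps):
        new_sharers = beta * s * i / population
        new_recovered = gamma * i
        s -= new_sharers
        i += new_sharers - new_recovered
        r += new_recovered
        peak = max(peak, i)
    return peak, r

# R0 = beta / gamma. Both parameter sets are hypothetical.
peak_low, total_low = simulate_sir(beta=0.05, gamma=0.1)   # R0 = 0.5
peak_high, total_high = simulate_sir(beta=0.3, gamma=0.1)  # R0 = 3.0
print(f"R0=0.5: peak sharers {peak_low:.0f}, ever shared {total_low:.0f}")
print(f"R0=3.0: peak sharers {peak_high:.0f}, ever shared {total_high:.0f}")
```

With R0 below 1 the number of active sharers never exceeds the initial seed and the outbreak fades, while with R0 above 1 the same seed grows into a large outbreak. Real platforms differ from this well-mixed model, since network structure and repeated exposure both matter.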

More importantly, some studies have shown that fake news usually represents only a small part of overall media consumption, and that the spread of false information on social media is highly skewed. A small number of accounts, known as "super sharers" and "super consumers", are responsible for sharing and consuming most of the content [3,24,73]. Although most of these studies come from the political domain, very similar results have been found in the context of the COVID-19 pandemic, during which superspreaders on Twitter and Facebook had a large influence on those platforms. One major problem is the existence of echo chambers, in which the flow of information is systematically biased towards like-minded people. Although the role of echo chambers is debated, research shows that the existence of such polarized clusters facilitates the spread of false information and hinders the spread of corrective information.

2.2 Exposure does not equal infection
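One way to quantify this kind of skew is to compute the fraction of all shares that comes from the most active accounts. The sketch below is an illustration only: the function name and the share counts are hypothetical and do not reproduce any figure from the studies cited.

```python
def top_share(share_counts, top_fraction):
    """Fraction of all shares produced by the most active accounts.

    share_counts: number of false-information shares per account
    top_fraction: fraction of accounts to treat as "super sharers"
    """
    ranked = sorted(share_counts, reverse=True)
    # Always include at least one account in the top group.
    k = max(1, round(len(ranked) * top_fraction))
    total = sum(ranked)
    return sum(ranked[:k]) / total if total else 0.0

# Hypothetical data: 100 accounts, two of which share heavily.
counts = [500, 300] + [2] * 98
concentration = top_share(counts, top_fraction=0.02)
print(f"Top 2% of accounts produce {concentration:.0%} of shares")
```

In this made-up example the top 2% of accounts produce about 80% of all shares, which is the kind of concentration the terms "super sharers" and "super consumers" describe.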

Exposure estimates based on social media data are often inconsistent with people's self-reported experiences. According to various opinion polls, more than one third of people report frequent (if not daily) exposure to false information. The validity of people's self-reported experiences may of course vary, but this raises questions about the accuracy of exposure estimates, which are often based on limited public data and may be sensitive to model assumptions. In addition, a key point to consider here is that exposure does not mean persuasion (or "infection"). For example, research on COVID-19 news headlines shows that people's judgments about the veracity of a headline have little influence on their intention to share it. People may therefore choose to share false information for reasons other than accuracy. For example, a recent study found that people often share content that seems "interesting if true". The study shows that although people rate fake news as less accurate, they find it more interesting than real news and are therefore willing to share it.

2.3 Spread: Limitations and Future Research

Research on spread faces significant limitations, including critical gaps in knowledge. There is skepticism about the rate at which exposure to false information translates into genuinely believing it, because research on media and persuasion effects shows that it is difficult to persuade people with traditional advertising. However, existing research often uses artificial laboratory designs that may not adequately represent the environment in which people make news-sharing decisions. For example, studies often test false information from different social and traditional media sources after a single exposure to a single message. We therefore need a better understanding of the frequency and intensity of exposure to false information that ultimately leads to persuasion. Most studies also rely on publicly available data about what people share or click on, but people may be exposed to, and influenced by, much more information while scrolling through their social media feeds. In addition, fake news is usually conceptualized as a list of URLs that have been fact-checked as true or false, but this type of fake news represents only a small part of false information; people may be more likely to encounter misleading or manipulative content than outright false content. Finally, micro-targeting greatly improves the ability of producers of false information to identify and target the subgroups of individuals who are most easily persuaded [83]. In short, more research is needed before accurate and valid conclusions can be drawn about population-level exposure to false information and the likelihood that it leads to infection (that is, persuasion).

III. Immunity

A rapidly emerging research direction is to evaluate the possibility of protecting the public from false information at the cognitive level. I will classify these studies according to whether their application is mainly prevention (pre-exposure) or treatment (post-exposure).

3.1 Treatment: Fact-Checking and Debunking

The traditional and standard approach to countering false information is to correct a falsehood after people have already been persuaded by it. For example, debunking false information about autism interventions has been shown to be effective in reducing support for treatments that lack an evidence base (such as dieting) [84]. Exposure to court-ordered corrective advertisements from the tobacco industry on the link between smoking and disease [85] can increase knowledge and reduce misperceptions about smoking. In a randomized controlled trial, a video debunking several myths about vaccination effectively reduced some influential misperceptions, such as the beliefs that vaccines cause autism or weaken the natural immune system. Meta-analyses have consistently found that fact-checking and debunking interventions are effective, including in countering health-related false information on social media.

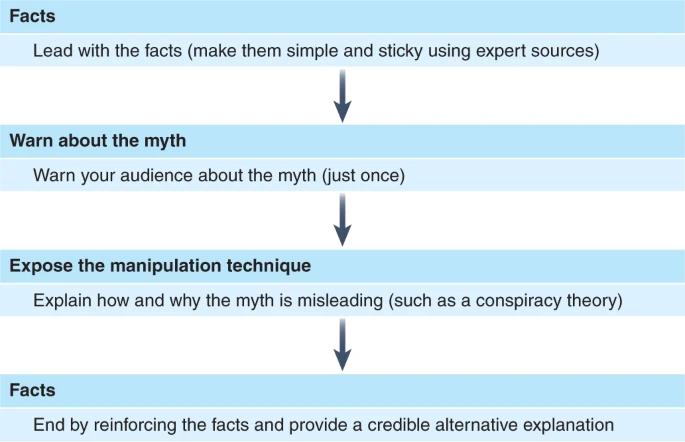

However, not all medical misperceptions are equally easy to correct. In fact, these analyses also indicate that the effectiveness of such interventions is significantly reduced by (1) the quality of the rebuttal, (2) the passage of time and (3) prior beliefs and ideologies. For example, the studies on autism and on corrective smoking advertisements mentioned above no longer showed an effect at follow-up after 1 week and 6 weeks, respectively. When designing corrections, simply labeling information as false or incorrect is usually not enough, because a bare retraction does not help people understand why the information is false or what the facts are. Practitioners are therefore often advised to write more detailed debunking materials. Reviews of the literature [91,92] show that best practices for designing debunking messages include:

1. Tell the truth first;

2. Appeal to scientific consensus and authoritative expert resources;

3. Ensure that the correction is easy to obtain and is no more complicated than the original false information;

4. Clearly explain why false information is false;

5. Provide a coherent alternative causal explanation (Figure 1).

Although comparative research is generally lacking, some recent studies show that optimizing debunking messages according to these guidelines improves their effect compared with alternative or business-as-usual methods [84].

Figure 1. Best-practice recommendations for effectively debunking false information. An effective debunking message should open with the facts and present them in a simple and memorable way. The audience should then be warned about the false information (without repeating it). Next, the manipulation techniques used to mislead people should be identified and exposed. Finally, the facts should be repeated and the correct explanation emphasized.

3.2 Debunking: Limitations and Future Research

Despite these advances, there are still substantial concerns about applying this kind of after-the-fact "therapeutic" correction, most notably the risk of a "backfire effect", in which people end up believing the false information more as a result of the correction. This backfire effect can occur along two potential dimensions [92,93]: one involves a psychological reaction against the correction itself (the "worldview" backfire effect), and the other involves the repetition of the false information (the "familiarity" backfire effect). Although early research suggested that, for example, correcting false information about the flu and MMR vaccines could make individuals who were already concerned more hesitant about vaccination, more recent research has found no evidence for such a worldview backfire effect. In fact, although evidence for the backfire effect is still widely cited, recent replications failed to reproduce the effect when correcting false information about vaccines. Thus, although the effect may exist, it appears to be less frequent and less strong than previously thought.

Debunking messages can also be designed to be consistent with the audience's worldview rather than in conflict with it, which minimizes the worldview backfire effect. Nevertheless, debunking imposes a rhetorical frame on the audience in which the false information has to be repeated in order to be corrected (that is, one is rebutting someone else's claim). There is therefore a risk that this repetition increases people's familiarity with the false information while the correction fails to be retained in long-term memory. Although studies clearly show that people are more likely to believe repeated (false) information than non-repeated (false) information, recent studies have found that the risk of ironically strengthening a falsehood while debunking it is relatively small, especially when the debunking message is made prominent relative to the false information. The current consensus is therefore that although practitioners should be aware of the risk of backfire, these side effects are rare and should not prevent the release of corrective information.

Having said that, two other noteworthy problems limit the effectiveness of therapeutic approaches. First, after-the-fact corrections do not reach as many people as the original false information. For example, it is estimated that only about 40% of smokers were exposed to the court-ordered corrective messages from the tobacco industry [98]. A related concern is that people continue to make inferences based on falsehoods even after receiving the correction [92]. This phenomenon is called the "continued influence" of false information, and meta-analyses have found strong evidence of the continued influence effect in a wide range of situations [88,89].

3.3 Preventive Measures: Psychological Prevention Theory of False Information

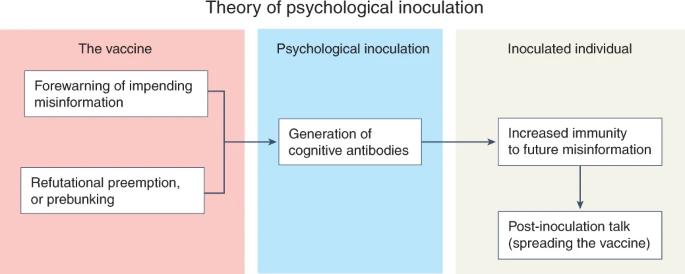

Therefore, researchers have recently begun to explore preventive or pre-emptive measures against false information, that is, acting before individuals come into contact with it or reach the "infectious" state. "Prebunking" is a more general term that is also used for interventions that remind people to "think before posting" [51], but such reminders alone do not give people any new skills to identify and resist false information. The most common framework for preventing harmful persuasion is psychological prevention theory, known in the English-language literature as inoculation theory (Figure 2) [100,101].

Psychological prevention theory follows a medical analogy: just as vaccines trigger antibody production to help build immunity against future infections, the same can be achieved at the level of information. By warning people in advance and exposing them to a severely weakened dose of false information (together with a strong refutation), people can build cognitive resistance to future false information. The theory works through two mechanisms: (1) motivational threat (the desire to defend oneself against manipulation attempts) and (2) refutational pre-emption (prior exposure to a weakened example of the attack). For example, one study found that inoculating people against conspiratorial arguments about vaccines before (rather than after) they were exposed to a conspiracy theory effectively increased their willingness to vaccinate. Recent reviews [102,104] and meta-analyses [105] indicate that psychological prevention is a robust strategy for building immunity to false information, with many applications in the health field, such as helping people become immune to false information about mammography in breast cancer screening.

Figure 2. Psychological prevention has two core components: (1) warning people in advance that they may be misled (activating the psychological "immune system"); and (2) pre-emptively debunking the false information (or the tactic behind it) by exposing people to a severely weakened dose of it together with a strong refutation (producing cognitive "antibodies"). Once people have gained immunity, they can indirectly spread the "vaccine" to others through offline and online interactions.

Several recent advances are worth noting. First, the field of psychological prevention has shifted from narrow-spectrum, fact-based prevention to broad-spectrum, technique-based immunity [102,108]. The reason for this shift is that although a severely weakened dose can be synthesized from an existing piece of false information (and then strongly refuted), psychological prevention is hard to scale if this process must be repeated for every individual falsehood. Instead, scholars have begun to identify the building blocks that false information has in common, including impersonating experts and doctors, manipulating people’s emotions with fear, and using conspiracy theories. Studies have found that people can be inoculated against these underlying tactics, so their immunity is strengthened against a whole range of false information that relies on them. This process is sometimes called cross-protection: inoculating people against one strain can protect them against related or different strains of false information that use the same tactic.

The second advance concerns active versus passive prevention. The traditional prevention process is passive, because people receive a specific rebuttal from the experimenter in advance, whereas active prevention encourages people to produce their own "antibodies". Perhaps the best-known examples of active prevention are popular gamified interventions such as the games Bad News and GoViral! [110], in which the player takes on the role of a producer of false information and is exposed, in a simulated social media environment, to weakened doses of the common tactics used to spread it. As part of this process, players actively generate their own media content and uncover the manipulation techniques. Research has found that people resist deception when they (1) realize that they are vulnerable to persuasion and (2) perceive an improper intent to manipulate their opinions. These games therefore aim to reveal people’s cognitive vulnerability and, through prior exposure to weak doses of false information, to motivate individuals to protect themselves against it. Randomized controlled trials have found that active prevention games help people identify false information [38,110,113,114], increase people’s confidence in their ability to discern the truth [110,113], and reduce self-reported sharing of false information. However, as with many biological vaccines, studies have found that psychological immunity weakens over time, although it can be maintained for several months with regular "booster shots". One benefit of this line of research is that these gamified interventions have been evaluated and scaled to millions of people as part of the World Health Organization’s "Stop The Spread" campaign and the United Nations’ "Verified" initiative, in collaboration with the British government.

3.4 Preventive Measures: Limitations and Future Research

One potential limitation is that, although false information recurs throughout history (consider the similarity between the claim that the cowpox vaccine would turn people into human-cow hybrids and the conspiracy theory that COVID-19 vaccines alter human DNA), psychological prevention does require at least some advance knowledge of the false information that people may encounter in the future. In addition, because medical workers are being trained to counter false information, it is important to avoid confusing terminology when psychological prevention is used to address vaccine hesitancy. For example, the method can be applied without an explicit analogy to vaccination, focusing instead on the value of "prevention" and on helping people uncover manipulation techniques.

Several other important open questions remain. For example, analogous to recent advances in experimental medicine on therapeutic vaccines, which can still boost the immune response after infection, studies have found that psychological prevention can still protect people from false information even after they have already been exposed to it [108,112,118]. This makes conceptual sense, because it may take repeated exposure over a long period before people are fully persuaded by false information or integrate it with their prior attitudes. However, there is still no clear conceptual boundary marking where therapeutic inoculation ends and traditional debunking begins.

In addition, although some comparisons of active and passive prevention exist [105,110], the evidence base for active prevention is still relatively small. Similarly, although studies comparing prevention with debunking suggest that prevention is indeed superior to after-the-fact treatment, more comparative studies are needed. Research has also found that people may pass on the psychological "vaccine" interpersonally or on social media. This process is called "post-inoculation talk" [104] and implies the possibility of herd immunity in online communities [110], but no social network simulations have yet evaluated the potential of psychological prevention. Current research also relies on self-reported sharing of false information. Future research needs to evaluate how far psychological prevention can spread among people and whether it affects objective news-sharing behavior on social media.
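To make the idea of such a simulation concrete, here is a minimal toy sketch in Python. It is not taken from the review or from any cited study: the random contact network, the three states, and every parameter (`inoculated_frac`, `p_misinfo`, `p_talk`, and so on) are assumptions chosen purely for illustration. Misinformed nodes pass false information to susceptible neighbours, while inoculated nodes pass on the "vaccine" through post-inoculation talk.

```python
import random


def simulate(n=200, k=4, inoculated_frac=0.2, p_misinfo=0.3, p_talk=0.1,
             steps=20, seed=0):
    """Toy model (all parameters hypothetical): return how many nodes end up misinformed."""
    rng = random.Random(seed)

    # Hypothetical contact network: every node links to k randomly chosen nodes.
    neighbours = {i: set() for i in range(n)}
    for i in range(n):
        for j in rng.sample(range(n), k):
            if j != i:
                neighbours[i].add(j)
                neighbours[j].add(i)

    # States: "S" susceptible, "M" misinformed, "I" inoculated (resistant).
    state = ["S"] * n
    for i in rng.sample(range(n), int(inoculated_frac * n)):
        state[i] = "I"
    susceptible = [i for i in range(n) if state[i] == "S"]
    state[rng.choice(susceptible)] = "M"  # a single initial spreader

    for _ in range(steps):
        new_state = state[:]
        for i in range(n):
            if state[i] == "S":
                continue  # susceptible nodes do not transmit anything
            # Misinformed nodes spread false information; inoculated nodes
            # spread the "vaccine" via post-inoculation talk.
            p = p_misinfo if state[i] == "M" else p_talk
            for j in neighbours[i]:
                if state[j] == "S" and new_state[j] == "S" and rng.random() < p:
                    new_state[j] = state[i]
        state = new_state

    return state.count("M")


if __name__ == "__main__":
    for talk in (0.0, 0.1, 0.3):
        print(f"p_talk={talk}: misinformed nodes = {simulate(p_talk=talk)}")
```

Varying `p_talk` and `inoculated_frac` in a sketch like this only shows what kind of question a simulation could ask. A serious evaluation would need empirically grounded network structure and transmission rates.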

summary

The spread of false information undermines public health efforts, from vaccination to public compliance with health-protective behaviors. Research has found that although people are sometimes deceived by misleading information on social media because they are inattentive and pay too little attention to accuracy, the politicized nature of many public health issues suggests that people also believe and share false information because doing so reinforces important social and political beliefs and identity structures. We need a more comprehensive framework that is sensitive to different contexts and can explain differences in susceptibility to false information in terms of how people weigh accuracy against social motivations when judging the veracity of news. Although "exposure" does not mean "infection", false information can spread rapidly online, and its spread often benefits from political echo chambers. Importantly, however, much of the false information on social media originates from influential accounts and superspreaders. Both therapeutic and preventive approaches have shown some success in countering false information. However, given the continued influence of false information after exposure, preventive approaches are of great value, and more research is needed to determine how best to combine debunking and prevention. Further research should also be encouraged to outline the psychological principles behind, and the potential challenges of, applying epidemiological models to the spread of false information. A major future challenge for the field will be to define clearly how false information is measured and conceptualized, and to develop standardized psychometric tools that allow results to be compared across studies.

references

Zarocostas, J. How to fight an infodemic. Lancet 395, 676 (2020).

Allcott, H. & Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 31, 211–236 (2017).

Grinberg, N. et al. Fake news on Twitter during the 2016 US presidential election. Science 363, 374–378 (2019).

Roozenbeek, J. et al. Susceptibility to misinformation about COVID-19 around the world. R. Soc. Open Sci. 7, 201199 (2020).

Romer, D. & Jamieson, K. H. Conspiracy theories as barriers to controlling the spread of COVID-19 in the US. Soc. Sci. Med. 263, 113356 (2020).

Imhoff, R. & Lamberty, P. A bioweapon or a hoax? The link between distinct conspiracy beliefs about the coronavirus disease (COVID-19) outbreak and pandemic behavior. Soc. Psychol. Personal. Sci. 11, 1110–1118 (2020).

Freeman, D. et al. Coronavirus conspiracy beliefs, mistrust, and compliance with government guidelines in England. Psychol. Med. https://doi.org/10.1017/S0033291720001890 (2020).

Loomba, S. et al. Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat. Hum. Behav. 5, 337–348 (2021).

Johnson, N. et al. The online competition between pro- and anti-vaccination views. Nature 582, 230–233 (2020).

Aghababaeian, H. et al. Alcohol intake in an attempt to fight COVID-19: a medical myth in Iran. Alcohol 88, 29–32 (2020).

Jolley, D. & Paterson, J. L. Pylons ablaze: examining the role of 5G COVID‐19 conspiracy beliefs and support for violence. Br. J. Soc. Psychol. 59, 628–640 (2020).

Dubé, E. et al. Vaccine hesitancy, vaccine refusal and the anti-vaccine movement: influence, impact and implications. Expert Rev. Vaccines 14, 99–117 (2015).

Armstrong, G. M. et al. A longitudinal evaluation of the Listerine corrective advertising campaign. J. Public Policy Mark. 2, 16–28 (1983).

Albarracin, D. et al. Misleading claims about tobacco products in YouTube videos: experimental effects of misinformation on unhealthy attitudes. J. Medical Internet Res. 20, e9959 (2018).

Krishna, A. & Thompson, T. L. Misinformation about health: a review of health communication and misinformation scholarship. Am. Behav. Sci. 65, 316–332 (2021).

Kucharski, A. Study epidemiology of fake news. Nature 540, 525–525 (2016).

Cinelli, M. et al. The COVID-19 social media infodemic. Sci. Rep. 10, 1–10 (2020).

Scales, D. et al. The COVID-19 infodemic—applying the epidemiologic model to counter misinformation. N. Engl. J. Med 385, 678–681 (2021).

Vraga, E. K. & Bode, L. Defining misinformation and understanding its bounded nature: using expertise and evidence for describing misinformation. Polit. Commun. 37, 136–144 (2020).

Southwell, B. G. et al. Misinformation as a misunderstood challenge to public health. Am. J. Prev. Med. 57, 282–285 (2019).

Wardle, C. & Derakhshan, H. Information Disorder: toward an Interdisciplinary Framework for Research and Policymaking. Council of Europe report DGI (2017)09 (Council of Europe, 2017).

van der Linden, S. et al. You are fake news: political bias in perceptions of fake news. Media Cult. Soc. 42, 460–470 (2020).

Tandoc, E. C. Jr et al. Defining ‘fake news’: a typology of scholarly definitions. Digit. Journal. 6, 137–153 (2018).

Allen, J. et al. Evaluating the fake news problem at the scale of the information ecosystem. Sci. Adv. 6, eaay3539 (2020).

Marsh, E. J. & Yang, B. W. in Misinformation and Mass Audiences (eds Southwell, B. G., Thorson, E. A., & Sheble, L) 15–34 (University of Texas Press, 2018).

Dechêne, A. et al. The truth about the truth: a meta-analytic review of the truth effect. Pers. Soc. Psychol. Rev. 14, 238–257 (2010).

Lewis, T. Eight persistent COVID-19 myths and why people believe them. Scientific American. https://www.scientificamerican.com/article/eight-persistent-covid-19-myths-and-why-people-believe-them/ (2020).

Wang, W. C. et al. On known unknowns: fluency and the neural mechanisms of illusory truth. J. Cogn. Neurosci. 28, 739–746 (2016).

Pennycook, G. et al. Prior exposure increases perceived accuracy of fake news. J. Exp. Psychol. Gen. 147, 1865–1880 (2018).

Fazio, L. K. et al. Repetition increases perceived truth equally for plausible and implausible statements. Psychon. Bull. Rev. 26, 1705–1710 (2019).

Fazio, L. K. et al. Knowledge does not protect against illusory truth. J. Exp. Psychol. Gen. 144, 993–1002 (2015).

De Keersmaecker, J. et al. Investigating the robustness of the illusory truth effect across individual differences in cognitive ability, need for cognitive closure, and cognitive style. Pers. Soc. Psychol. Bull. 46, 204–215 (2020).

Guess, A. et al. Less than you think: prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 5, eaau4586 (2019).

Saunders, J. & Jess, A. The effects of age on remembering and knowing misinformation. Memory 18, 1–11 (2010).

Brashier, N. M. & Schacter, D. L. Aging in an era of fake news. Curr. Dir. Psychol. Sci. 29, 316–323 (2020).

Pennycook, G. & Rand, D. G. Lazy, not biased: susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition 188, 39–50 (2019).

Imhoff, R. et al. Conspiracy mentality and political orientation across 26 countries. Nat. Hum. Behav. https://doi.org/10.1038/s41562-021-01258-7 (2022).

Roozenbeek, J. & van der Linden, S. Fake news game confers psychological resistance against online misinformation. Humanit. Soc. Sci. Commun. 5, 1–10 (2019).

Van der Linden, S. et al. The paranoid style in American politics revisited: an ideological asymmetry in conspiratorial thinking. Polit. Psychol. 42, 23–51 (2021).

De Keersmaecker, J. & Roets, A. ‘Fake news’: incorrect, but hard to correct. The role of cognitive ability on the impact of false information on social impressions. Intelligence 65, 107–110 (2017).

Bronstein, M. V. et al. Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. J. Appl. Res. Mem. Cogn. 8, 108–117 (2019).

Greene, C. M. et al. Misremembering Brexit: partisan bias and individual predictors of false memories for fake news stories among Brexit voters. Memory 29, 587–604 (2021).

Gawronski, B. Partisan bias in the identification of fake news. Trends Cogn. Sci. 25, 723–724 (2021).

Rathje, S. et al. Meta-analysis reveals that accuracy nudges have little to no effect for US conservatives: Regarding Pennycook et al. (2020). Psychol. Sci. https://doi.org/10.25384/SAGE.12594110.v2 (2021).

Pennycook, G. & Rand, D. G. The psychology of fake news. Trends Cogn. Sci. 25, 388–402 (2021).

van der Linden, S. et al. How can psychological science help counter the spread of fake news? Span. J. Psychol. 24, e25 (2021).

Evans, J. S. B. In two minds: dual-process accounts of reasoning. Trends Cogn. Sci. 7, 454–459 (2003).

Bago, B. et al. Fake news, fast and slow: deliberation reduces belief in false (but not true) news headlines. J. Exp. Psychol. Gen. 149, 1608–1613 (2020).

Scherer, L. D. et al. Who is susceptible to online health misinformation? A test of four psychosocial hypotheses. Health Psychol. 40, 274–284 (2021).

Pennycook, G. et al. Fighting COVID-19 misinformation on social media: experimental evidence for a scalable accuracy-nudge intervention. Psychol. Sci. 31, 770–780 (2020).

Pennycook, G. et al. Shifting attention to accuracy can reduce misinformation online. Nature 592, 590–595 (2021).

Swami, V. et al. Analytic thinking reduces belief in conspiracy theories. Cognition 133, 572–585 (2014).

Kunda, Z. The case for motivated reasoning. Psychol. Bull. 108, 480–498 (1990).

Kahan, D. M. in Emerging Trends in the Social and Behavioral sciences (eds Scott, R. & Kosslyn, S.) 1–16 (John Wiley & Sons, 2016).

Bolsen, T. et al. The influence of partisan motivated reasoning on public opinion. Polit. Behav. 36, 235–262 (2014).

Osmundsen, M. et al. Partisan polarization is the primary psychological motivation behind political fake news sharing on Twitter. Am. Polit. Sci. Rev. 115, 999–1015 (2021).

Van Bavel, J. J. et al. Political psychology in the digital (mis) information age: a model of news belief and sharing. Soc. Issues Policy Rev. 15, 84–113 (2020).

Rathje, S. et al. Out-group animosity drives engagement on social media. Proc. Natl Acad. Sci. USA 118, e2024292118 (2021).

Kahan, D. M. et al. Motivated numeracy and enlightened self-government. Behav. Public Policy 1, 54–86 (2017).

Kahan, D. M. et al. The polarizing impact of science literacy and numeracy on perceived climate change risks. Nat. Clim. Chang. 2, 732–735 (2012).

Drummond, C. & Fischhoff, B. Individuals with greater science literacy and education have more polarized beliefs on controversial science topics. Proc. Natl Acad. Sci. USA 114, 9587–9592 (2017).

Traberg, C. S. & van der Linden, S. Birds of a feather are persuaded together: perceived source credibility mediates the effect of political bias on misinformation susceptibility. Pers. Individ. Differ. 185, 111269 (2022).

Roozenbeek, J. et al. How accurate are accuracy-nudge interventions? A preregistered direct replication of Pennycook et al. (2020). Psychol. Sci. 32, 1169–1178 (2021).

Persson, E. et al. A preregistered replication of motivated numeracy. Cognition 214, 104768 (2021).

Connor, P. et al. Motivated numeracy and active reasoning in a Western European sample. Behav. Public Policy 1–23 (2020).

van der Linden, S. et al. Scientific agreement can neutralize politicization of facts. Nat. Hum. Behav. 2, 2–3 (2018).

Tappin, B. M. et al. Rethinking the link between cognitive sophistication and politically motivated reasoning. J. Exp. Psychol. Gen. 150, 1095–1114 (2021).

Tappin, B. M. et al. Thinking clearly about causal inferences of politically motivated reasoning: why paradigmatic study designs often undermine causal inference. Curr. Opin. Behav. Sci. 34, 81–87 (2020).

Druckman, J. N. & McGrath, M. C. The evidence for motivated reasoning in climate change preference formation. Nat. Clim. Chang. 9, 111–119 (2019).

Juul, J. L. & Ugander, J. Comparing information diffusion mechanisms by matching on cascade size. Proc. Natl Acad. Sci. USA 118, e2100786118 (2021).

Vosoughi, S. et al. The spread of true and false news online. Science 359, 1146–1151 (2018).

Cinelli, M. et al. The echo chamber effect on social media. Proc. Natl Acad. Sci. USA 118, e2023301118 (2021).

Guess, A. et al. Exposure to untrustworthy websites in the 2016 US election. Nat. Hum. Behav. 4, 472–480 (2020).

Yang, K. C. et al. The COVID-19 infodemic: Twitter versus Facebook. Big Data Soc. 8, 20539517211013861 (2021).

Del Vicario, M. et al. The spreading of misinformation online. Proc. Natl Acad. Sci. USA 113, 554–559 (2016).

Zollo, F. et al. Debunking in a world of tribes. PLoS ONE 12, e0181821 (2017).

Guess, A. M. (Almost) everything in moderation: new evidence on Americans’ online media diets. Am. J. Pol. Sci. 65, 1007–1022 (2021).

Törnberg, P. Echo chambers and viral misinformation: modeling fake news as complex contagion. PLoS ONE 13, e0203958 (2018).

Choi, D. et al. Rumor propagation is amplified by echo chambers in social media. Sci. Rep. 10, 1–10 (2020).

Eurobarometer on Fake News and Online Disinformation. European Commission https://ec.europa.eu/digital-single-market/en/news/final-results-eurobarometer-fake-news-and-online-disinformation (2018).

Altay, S. et al. ‘If this account is true, it is most enormously wonderful’: interestingness-if-true and the sharing of true and false news. Digit. Journal. https://doi.org/10.1080/21670811.2021.1941163 (2021).

Kalla, J. L. & Broockman, D. E. The minimal persuasive effects of campaign contact in general elections: evidence from 49 field experiments. Am. Political Sci. Rev. 112, 148–166 (2018).

Matz, S. C. et al. Psychological targeting as an effective approach to digital mass persuasion. Proc. Natl Acad. Sci. USA 114, 12714–12719 (2017).

Paynter, J. et al. Evaluation of a template for countering misinformation: real-world autism treatment myth debunking. PLoS ONE 14, e0210746 (2019).

Smith, P. et al. Correcting over 50 years of tobacco industry misinformation. Am. J. Prev. Med 40, 690–698 (2011).

Yousuf, H. et al. A media intervention applying debunking versus non-debunking content to combat vaccine misinformation in elderly in the Netherlands: a digital randomised trial. EClinicalMedicine 35, 100881 (2021).

Walter, N. & Murphy, S. T. How to unring the bell: a meta-analytic approach to correction of misinformation. Commun. Monogr. 85, 423–441 (2018).

Chan, M. P. S. et al. Debunking: a meta-analysis of the psychological efficacy of messages countering misinformation. Psychol. Sci. 28, 1531–1546 (2017).

Walter, N. et al. Evaluating the impact of attempts to correct health misinformation on social media: a meta-analysis. Health Commun. 36, 1776–1784 (2021).

Aikin, K. J. et al. Correction of overstatement and omission in direct-to-consumer prescription drug advertising. J. Commun. 65, 596–618 (2015).

Lewandowsky, S. et al. The Debunking Handbook 2020 https://www.climatechangecommunication.org/wp-content/uploads/2020/10/DebunkingHandbook2020.pdf (2020).

Lewandowsky, S. et al. Misinformation and its correction: continued influence and successful debiasing. Psychol. Sci. Publ. Int 13, 106–131 (2012).

Swire-Thompson, B. et al. Searching for the backfire effect: measurement and design considerations. J. Appl. Res. Mem. Cogn. 9, 286–299 (2020).

Nyhan, B. et al. Effective messages in vaccine promotion: a randomized trial. Pediatrics 133, e835–e842 (2014).

Nyhan, B. & Reifler, J. Does correcting myths about the flu vaccine work? An experimental evaluation of the effects of corrective information. Vaccine 33, 459–464 (2015).

Wood, T. & Porter, E. The elusive backfire effect: mass attitudes’ steadfast factual adherence. Polit. Behav. 41, 135–163 (2019).

Haglin, K. The limitations of the backfire effect. Res. Politics https://doi.org/10.1177/2053168017716547 (2017).

Chido-Amajuoyi et al. Exposure to court-ordered tobacco industry antismoking advertisements among US adults. JAMA Netw. Open 2, e196935 (2019).

Walter, N. & Tukachinsky, R. A meta-analytic examination of the continued influence of misinformation in the face of correction: how powerful is it, why does it happen, and how to stop it? Commun. Res 47, 155–177 (2020).

Papageorgis, D. & McGuire, W. J. The generality of immunity to persuasion produced by pre-exposure to weakened counterarguments. J. Abnorm. Psychol. 62, 475–481 (1961).

Papageorgis, D. & McGuire, W. J. The generality of immunity to persuasion produced by pre-exposure to weakened counterarguments. J. Abnorm. Psychol. 62, 475–481 (1961).

Lewandowsky, S. & van der Linden, S. Countering misinformation and fake news through inoculation and prebunking. Eur. Rev. Soc. Psychol. 32, 348–384 (2021).

Jolley, D. & Douglas, K. M. Prevention is better than cure: addressing anti vaccine conspiracy theories. J. Appl. Soc. Psychol. 47, 459–469 (2017).

Compton, J. et al. Inoculation theory in the post‐truth era: extant findings and new frontiers for contested science, misinformation, and conspiracy theories. Soc. Personal. Psychol. 15, e12602 (2021).

Banas, J. A. & Rains, S. A. A meta-analysis of research on inoculation theory. Commun. Monogr. 77, 281–311 (2010).

Compton, J. et al. Persuading others to avoid persuasion: Inoculation theory and resistant health attitudes. Front. Psychol. 7, 122 (2016).

Iles, I. A. et al. Investigating the potential of inoculation messages and self-affirmation in reducing the effects of health misinformation. Sci. Commun. 43, 768–804 (2021).

Cook, J. et al. Neutralizing misinformation through inoculation: exposing misleading argumentation techniques reduces their influence. PLoS ONE 12, e0175799 (2017).

van der Linden, S., & Roozenbeek, J. in The Psychology of Fake News: Accepting, Sharing, and Correcting Misinformation (eds Greifeneder, R., Jaffe, M., Newman, R., & Schwarz, N.) 147–169 (Psychology Press, 2020).

Basol, M. et al. Towards psychological herd immunity: cross-cultural evidence for two prebunking interventions against COVID-19 misinformation. Big Data Soc. 8, 20539517211013868 (2021).

Sagarin, B. J. et al. Dispelling the illusion of invulnerability: the motivations and mechanisms of resistance to persuasion. J. Pers. Soc. Psychol. 83, 526–541 (2002).

van der Linden, S. et al. Inoculating the public against misinformation about climate change. Glob. Chall. 1, 1600008 (2017).

Basol, M. et al. Good news about bad news: gamified inoculation boosts confidence and cognitive immunity against fake news. J. Cogn. 3, 2 (2020).

Basol, M. et al. Good news about bad news: gamified inoculation boosts confidence and cognitive immunity against fake news. J. Cogn. 3, 2 (2020).

Roozenbeek, J., & van der Linden, S. Breaking Harmony Square: a game that ‘inoculates’ against political misinformation. The Harvard Kennedy School Misinformation Review https://doi.org/10.37016/mr-2020-47 (2020).

What is Go Viral? World Health Organization https://www.who.int/news/item/23-09-2021-what-is-go-viral (WHO, 2021).

Abbasi, J. COVID-19 conspiracies and beyond: how physicians can deal with patients’ misinformation. JAMA 325, 208–210 (2021).

Compton, J. Prophylactic versus therapeutic inoculation treatments for resistance to influence. Commun. Theory 30, 330–343 (2020).

Lazer, D. M. et al. The science of fake news. Science 359, 1094–1096 (2018).

Pennycook, G. & Rand, D. G. Who falls for fake news? The roles of bullshit receptivity, overclaiming, familiarity, and analytic thinking. J. Pers. 88, 185–200 (2020).

Benton, J. Facebook sent a ton of traffic to a Chicago Tribune story. So why is everyone mad at them? NiemanLab https://www.niemanlab.org/2021/08/facebook-sent-a-ton-of-traffic-to-a-chicago-tribune-story-so-why-is-everyone-mad-at-them/ (2021).

Poutoglidou, F. et al. Ibuprofen and COVID-19 disease: separating the myths from facts. Expert Rev. Respir. Med 15, 979–983 (2021).

Maertens, R. et al. The Misinformation Susceptibility Test (MIST): a psychometrically validated measure of news veracity discernment. Preprint at PsyArXiv https://doi.org/10.31234/osf.io/gk68h (2021).

Original title: Overview of Information Epidemiology: Susceptibility, Dissemination and Immunity of False Information